At its core, AI's breakneck pace has become a question of direction for most companies. With major breakthroughs arriving almost daily, companies face existential questions about how to keep up, where to go next and how to get there.

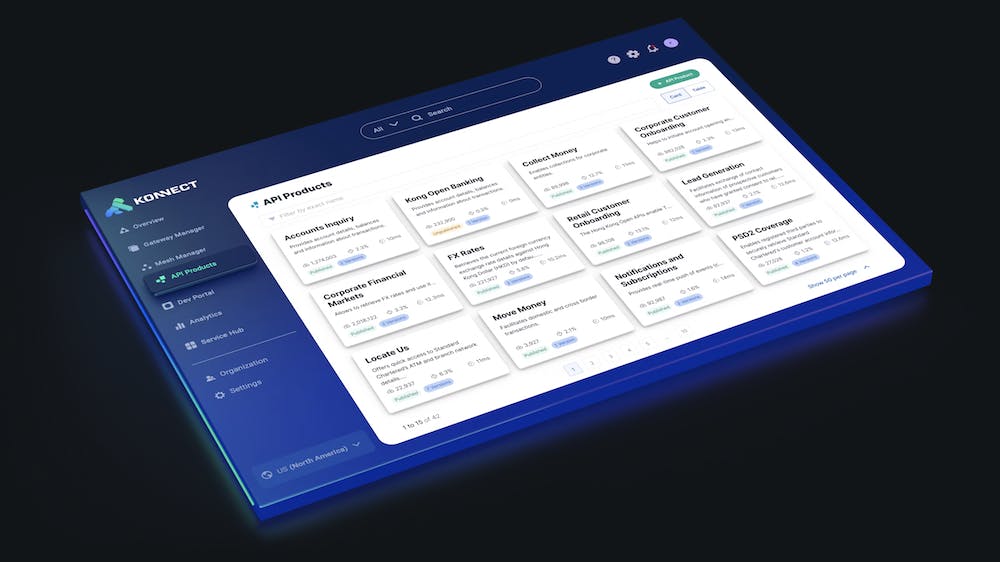

Behdad Ebadifar, Director of Sales Engineering at Kong, whose semantic AI gateway runs and secures LLM traffic, offers insight into the hard questions businesses must now answer: which AI models to choose, how to manage agents, and how to keep their strategy current while AI advances faster than most organizations can follow.

Unprecedented speed: "It has to be your full-time job just trying to keep up with this technology at this point," says Ebadifar, highlighting the challenge of staying current. "You need to be an expert to really understand all of the various updates. It's constantly evolving and improving at a rate that I don't think we've seen in technology before."

With no sign of a slowdown, the pressure on businesses keeps mounting. "We know that the rate will continue. There might be some consolidation, there might be certain technologies where you hit critical speed. But for the most part right now we're still in free fall, where we're still accelerating," Ebadifar explains.

The case for agnosticism: Every new model raises questions about which option is the 'best fit' for a company's strategy and growth. When it comes to selecting AI models and technologies, Ebadifar advocates a flexible approach. "You don't know what model will be best for which future situation," he says. "Will it be the small model or the big model? Will it be OpenAI? Will it be Mistral? Will you be using Anthropic? The developers might have different scenarios that you haven't seen or thought of yet, so it's important to remain model agnostic."

AI strategy as a top priority: For enterprise leaders, the question of AI strategy has become inescapable. "Almost every conversation with enterprises includes an initiative where they're asking 'What is our AI strategy?'" Ebadifar reports. "This is front of mind for everyone I talk to, every CTO I talk to."

For some organizations, AI strategy becomes an in-house endeavor, with companies racing to develop their own AI solutions rather than relying on paid external ones.

Internal agent development: Ebadifar is currently seeing a shift toward organizations building their own AI agents rather than relying exclusively on third-party solutions.

"What we're seeing in real time is the ability to create our own agents," he says. While chatbots represented the first iteration of this technology, enterprises are now exploring more sophisticated applications. "A lot of companies I talk to have initiatives where different departments want to build models on their documentation and then have a chatbot front end, all internal."

The next frontier involves more complex agent ecosystems. "If the real power of this technology is going to be in what we call agents — little worker nodes doing their own tasks — we need to focus on how to make those workers better, how to understand what they're doing, and how to iterate from a management perspective," Ebadifar explains.

This could include hierarchical agent structures with "manager agents managing the sub-agents and doing A/B testing and iteration on new function calls."

Guardrails: For companies that develop AI internally, Ebadifar emphasizes the importance of establishing appropriate safeguards. "You have to make sure you're putting up those safety guards because developers are going to use it. It's going to get into your systems."

The challenge is implementing "proper governance and security policies around AI while staying flexible and allowing developers and engineers to use it" across applications, features, process automation, and agentic workflows. "You need to make sure you're agnostic and a bit future-proofed," he advises.